Docker has just released a new build of Docker Desktop for Mac users, featuring an exciting new AI capability called "Docker Model Runner". This feature allows you to run large language models (LLMs) directly on your MacBook as easily as running a Docker container.

As someone who isn’t a huge fan of AI and doesn’t consider themselves an expert in the field, I approached this feature with some skepticism. However, as a Docker Captain, I had the opportunity to test an early build of Docker Desktop with this functionality. My opinion changed dramatically after I gave it a real-world test during a train journey across the country, where I had poor internet connectivity. Luckily, I had pre-downloaded a few LLMs onto my MacBook and decided to give them a try. The results were nothing short of impressive.

Getting Started with Docker Model Runner

To use Docker Model Runner, you’ll need to ensure you’re on a supported version of Docker Desktop. This feature is available starting from v4.40 and is currently supported only on Apple Silicon (M1/M2/M3/M4) Macs.

Enabling Model Runner

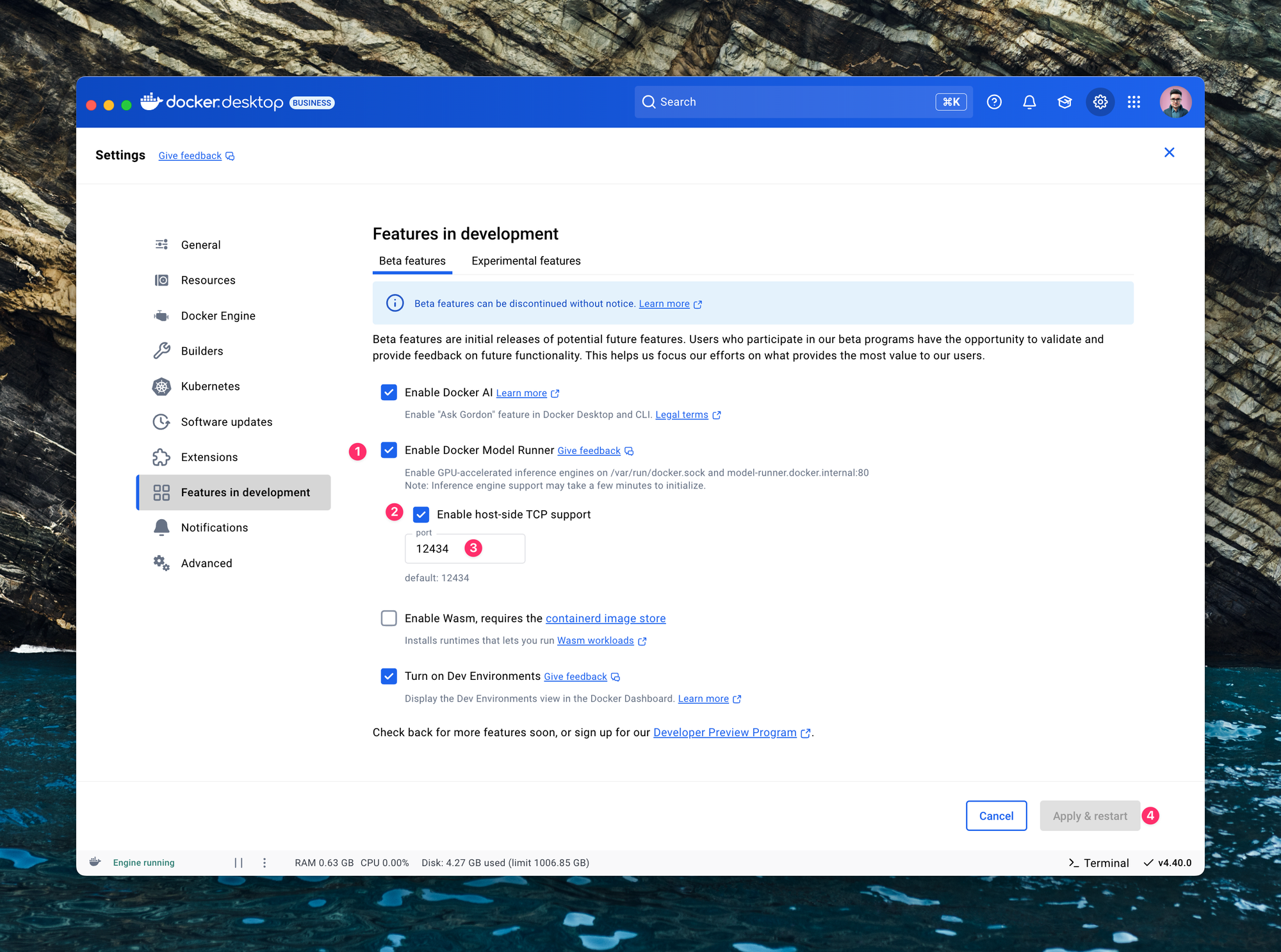

- Open Docker Desktop.

- Navigate to:

Settings → Features in Development → Beta Features → Enable Model Runner

- Click Apply & Restart to activate the feature.

Using the docker model CLI

After enabling the feature and restarting Docker Desktop, you can interact with the Model Runner using the docker model command-line interface (CLI). Here are some useful commands:

- Check the status of Model Runner:

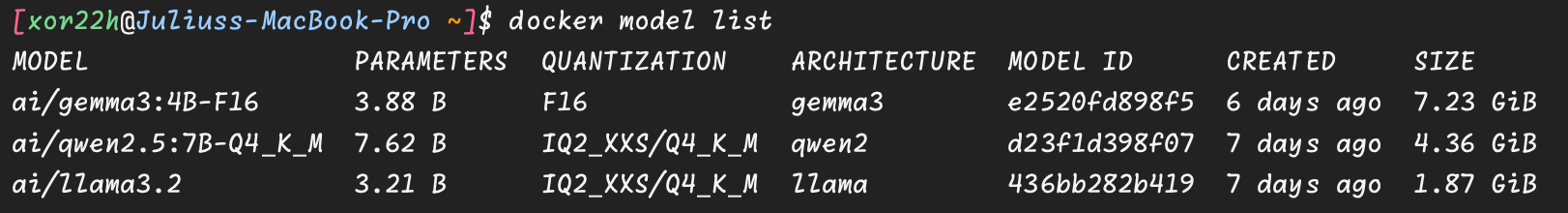

docker model status

- List available (downloaded) models:

docker model list
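If you want to run these checks from a script (say, before heading somewhere without connectivity), a thin wrapper around the CLI is enough. The following is a minimal sketch, assuming the `docker` binary is on your PATH; the helper names `model_cmd` and `run_model_cmd` are made up for illustration and are not part of Docker.

```python
import subprocess


def model_cmd(subcommand, *args):
    """Build the argument list for a `docker model` subcommand."""
    return ["docker", "model", subcommand, *args]


def run_model_cmd(subcommand, *args):
    """Run a `docker model` subcommand and return (exit code, output).

    Assumption: the Docker CLI is installed. If it is missing, a
    conventional "command not found" code (127) is returned instead
    of raising an exception.
    """
    try:
        result = subprocess.run(
            model_cmd(subcommand, *args),
            capture_output=True,
            text=True,
            check=False,
        )
    except FileNotFoundError:
        return 127, "docker CLI not found on PATH"
    return result.returncode, (result.stdout + result.stderr).strip()
```

Calling `run_model_cmd("status")` or `run_model_cmd("list")` returns the exit code together with whatever the CLI printed, so a script can stop early when Model Runner is not enabled.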

Downloading models

Pull models from Docker Hub under the ai namespace:

docker model pull ai/llama3.2

docker model pull ai/qwen2.5:7B-Q4_K_M

docker model pull ai/gemma3:4B-F16

Once downloaded, you can run these models locally in either single-question mode or interactive chat mode.

Examples:

- Ask a single question:

docker model run ai/llama3.2 "Hello"

- Start an interactive session:

# Start interactive sessions

docker model run ai/llama3.2

Interactive chat mode started. Type '/bye' to exit.

> Hey

How can I assist you today?

> /bye

Configuring Docker Model Runner inside Zed Editor

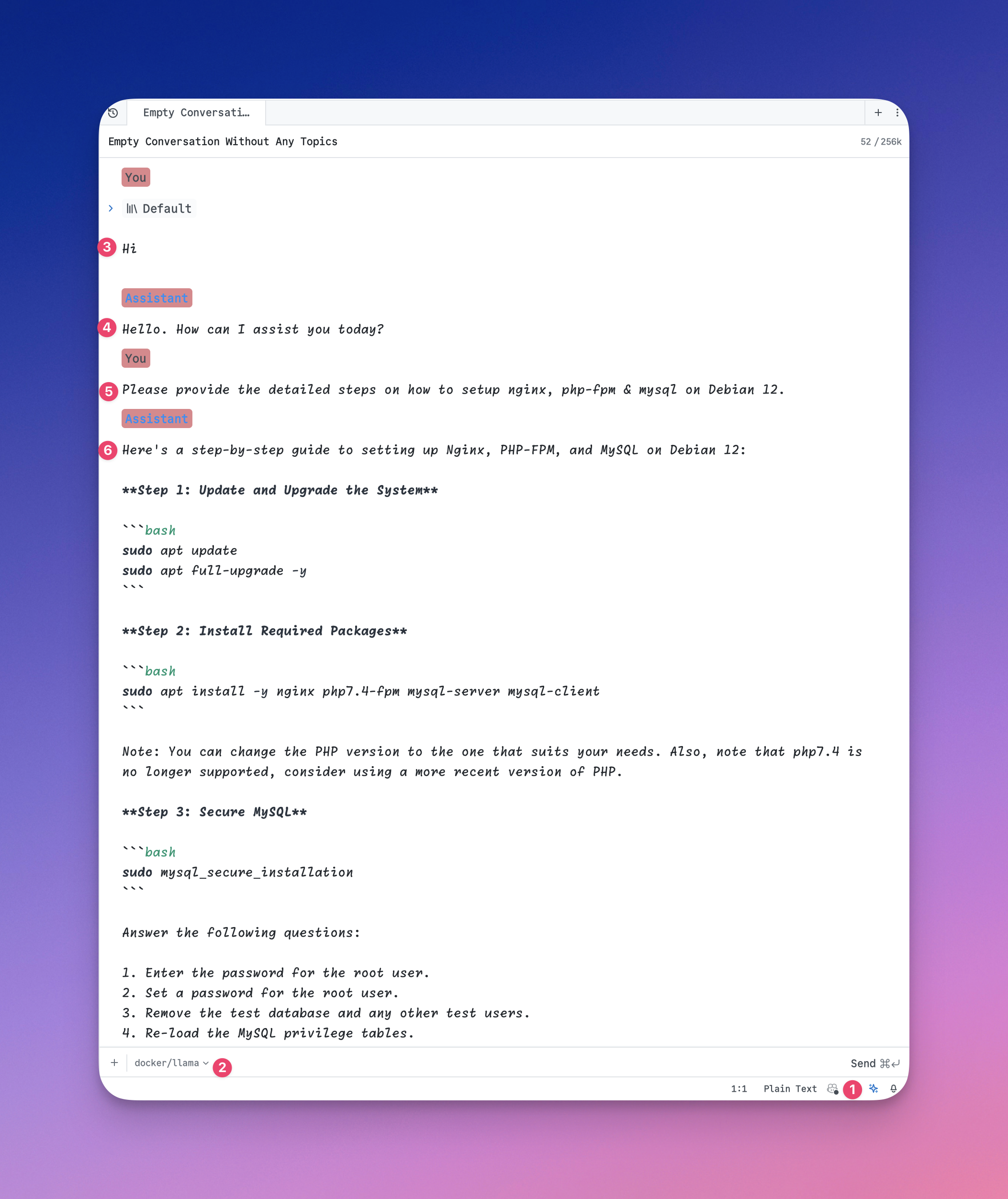

Docker Desktop can also expose an OpenAI-compatible API on a local port of your machine, which makes it possible to integrate with third-party tools like Zed Editor.
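Before wiring up an editor, it can help to confirm that the endpoint answers at all. The sketch below is a minimal example using only the Python standard library. It assumes the base URL used in this article (http://localhost:12434/engines/llama.cpp/v1), that host-side TCP access on that port is enabled in Docker Desktop, and that the server follows the usual OpenAI convention of a `/chat/completions` path and a `choices[0].message.content` response field. The function names are illustrative.

```python
import json
import urllib.request

# Base URL as used in this article; the port and path are assumptions
# that depend on how Model Runner is configured on your machine.
BASE_URL = "http://localhost:12434/engines/llama.cpp/v1"


def build_chat_request(model, prompt, base_url=BASE_URL):
    """Build (without sending) an OpenAI-style chat completion request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(response_body):
    """Pull the assistant text out of an OpenAI-style response body."""
    return response_body["choices"][0]["message"]["content"]


def ask(model, prompt, timeout=120):
    """Send one question to the local model and return its answer."""
    request = build_chat_request(model, prompt)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return extract_reply(json.load(response))
```

With a model already pulled, `ask("ai/llama3.2", "Hello")` should return the model's reply as a string. If the call fails with a connection error, the TCP endpoint is probably not enabled.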

First, open Zed Editor settings.json and add these lines.

{
  "language_models": {
    "openai": {
      "api_url": "http://localhost:12434/engines/llama.cpp/v1",
      "version": "1",
      "available_models": [
        {
          "max_tokens": 256000,
          "name": "ai/llama3.2",
          "display_name": "docker/llama"
        },
        {
          "max_tokens": 256000,
          "name": "ai/gemma3:4B-F16",
          "display_name": "docker/gemma"
        }
      ]
    }
  }
}

The main settings here are:

- api_url - the endpoint of the local API, which is http://localhost:12434/engines/llama.cpp/v1

- available_models - the array of models you want to be able to use inside the editor. Models must be pulled in advance: they are not pulled automatically and won't work otherwise.

- name - the name of the model you pulled.

- display_name - a user-friendly name for display purposes.

- max_tokens - set this based on your model's capacity (e.g., most handle up to 128k or 256k tokens).

Finally, in Zed Editor’s AI settings, set up an OpenAI API key (any string will suffice for Docker Model Runner).

And here is an example conversation:

Real-World Use Case

During my train journey, I used Docker Model Runner as a coding assistant without internet access. While working on a program to synchronize data between Shopify and accounting software, the LLM provided clear explanations of my codebase and suggested improvements for logic and security. Even on my MacBook Pro with an M2 chip and just 16GB of RAM, the performance was fast and smooth.

How does the Model Runner work?

With Docker Model Runner, the AI model DOES NOT run in a container. Instead, Docker Model Runner uses a host-installed inference server (llama.cpp for now) that runs natively on your Mac.

1. Host-level process:

- Docker Desktop runs llama.cpp directly on your host machine

- This allows direct access to the hardware GPU acceleration on Apple Silicon

2. GPU acceleration:

- By running directly on the host, the inference server can access Apple's Metal API

- This provides direct GPU acceleration without the overhead of containerization

- You can see the GPU usage in Activity Monitor when queries are being processed

3. Model Loading Process:

- When you run docker model pull, the model files are downloaded from Docker Hub

- These models are cached locally on the host machine's storage

- Models are dynamically loaded into memory by llama.cpp when needed
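One rough way to observe the on-demand loading is to time two identical queries in a row: if the model is loaded only when first needed, the first call should usually take noticeably longer than the second. The sketch below is an informal check rather than a benchmark. It assumes the `docker model run` single-question form shown earlier and an already pulled `ai/llama3.2` model; `time_calls` and `ask_once` are illustrative names.

```python
import subprocess
import time


def time_calls(fn, repeats=2):
    """Call fn() `repeats` times and return each call's wall-clock duration in seconds."""
    durations = []
    for _ in range(repeats):
        start = time.perf_counter()
        fn()
        durations.append(time.perf_counter() - start)
    return durations


def ask_once(model="ai/llama3.2", prompt="Hello"):
    """Run one single-question query through the CLI (requires Docker Desktop)."""
    subprocess.run(
        ["docker", "model", "run", model, prompt],
        capture_output=True,
        text=True,
        check=True,
    )
```

Running `cold, warm = time_calls(ask_once)` gives two durations to compare. Generation time varies between runs, so treat the difference as a hint, not a measurement.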

Limitations

While Docker Model Runner is promising, there are some limitations to be aware of:

- Currently supported only on Apple Silicon (Windows support is planned for future releases).

- No CLI support yet for publishing models as OCI artifacts.

- No hardware validation for large models—running oversized models may freeze your system (this validation is on the roadmap).
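Until that validation exists, a back-of-the-envelope estimate can help you decide whether a model is worth pulling. The sketch below approximates the size of the weights as parameters × bits per weight / 8. The parameter counts are the nominal ones suggested by the tags (7B, 4B), the figure of roughly 4.85 bits per weight for Q4_K_M is an approximation, and the 70% headroom factor is an arbitrary assumption. Real memory use is higher because of the context cache and runtime overhead.

```python
def estimated_weight_gib(n_params, bits_per_weight):
    """Approximate size of the model weights in GiB."""
    return n_params * bits_per_weight / 8 / 2**30


def fits_in_memory(n_params, bits_per_weight, ram_gib, headroom=0.7):
    """Return True if the weights fit within a fraction of total RAM.

    `headroom` is an arbitrary safety factor (an assumption) that leaves
    room for the OS, other applications, and the model's context cache.
    """
    return estimated_weight_gib(n_params, bits_per_weight) <= ram_gib * headroom


if __name__ == "__main__":
    ram_gib = 16  # e.g. a 16GB MacBook
    candidates = [
        ("7B at ~4.85 bits/weight (Q4_K_M, approximate)", 7e9, 4.85),
        ("4B at 16 bits/weight (F16)", 4e9, 16),
        ("70B at ~4.85 bits/weight (hypothetical)", 70e9, 4.85),
    ]
    for label, n_params, bits in candidates:
        size = estimated_weight_gib(n_params, bits)
        verdict = "likely fits" if fits_in_memory(n_params, bits, ram_gib) else "too large"
        print(f"{label}: ~{size:.1f} GiB of weights, {verdict} in {ram_gib} GiB RAM")
```

By this estimate, a 4B model at F16 needs about 7.5 GiB for weights alone, which is already close to half of a 16GB machine.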

Summary

Docker Model Runner offers an easy way to run LLMs locally without requiring internet access (once models are downloaded). It enhances security by ensuring that none of your conversations or files are shared with external services. Whether you're offline or concerned about privacy, this feature is a game-changer for running AI workloads directly on your machine.
