We Are Developers: Docker Offload, Docker Model Runner & more...

Today, at the We Are Developers conference in Berlin, Docker Inc. announced a lot of news that should be interesting for developers building AI-based apps (and not only them).

In short

- Docker Offload - A single command to switch between your local development environment and cloud resources, granting you access to powerful GPUs.

- Collaboration with Google Cloud - enabling easy deployment into production - directly from your compose.yaml - the same one you are using on your machine. No need for Kubernetes manifests or complex terraform/ansible/etc...

- Open-sourced Docker Model Runner & MCP Gateway

Docker Offload

Power of cloud meets your daily tools

Docker Offload is a great feature, especially if you have an older machine with limited memory or an older GPU. Running AI models locally requires huge amounts of memory and a really powerful GPU.

With my own M2 MacBook and 16GB of RAM, I was able to run smaller versions of Gemma3 or Qwen. I actually have another post about it here:

The problem: that was the first time since I bought this machine that I heard it screaming, with fans running at full speed. It drains the battery and makes your computer so hot that you don't want to hold it on your lap anymore.

Docker Offload comes to the rescue: a single command switches you to the cloud in a few seconds, giving you around 16GB of memory, an NVIDIA L4 GPU with 24GB of VRAM, and a fast network connection. (It's based on AWS g6.large for now; I've verified this using the AWS Instance Metadata endpoint.)
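For anyone who wants to repeat that check, here is a minimal sketch of how the instance type can be read from the EC2 Instance Metadata Service. It assumes the code runs in a container inside an Offload session, that the metadata endpoint is reachable from there, and that the token-based IMDSv2 flow is allowed; none of this is guaranteed by Docker, and the helper names are made up for illustration.

```python
import urllib.request

# Link-local address of the EC2 Instance Metadata Service (IMDS).
IMDS_BASE = "http://169.254.169.254/latest"


def build_token_request(ttl_seconds: int = 60) -> urllib.request.Request:
    """Prepare the IMDSv2 request that asks for a short-lived session token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/api/token",
        method="PUT",
        headers={"X-aws-ec2-metadata-token-ttl-seconds": str(ttl_seconds)},
    )


def build_instance_type_request(token: str) -> urllib.request.Request:
    """Prepare the request that reads the instance type using the token."""
    return urllib.request.Request(
        f"{IMDS_BASE}/meta-data/instance-type",
        headers={"X-aws-ec2-metadata-token": token},
    )


def fetch_instance_type(timeout: float = 2.0) -> str:
    """Run both requests; raises an error if the endpoint is unreachable."""
    with urllib.request.urlopen(build_token_request(), timeout=timeout) as resp:
        token = resp.read().decode()
    request = build_instance_type_request(token)
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        return resp.read().decode()
```

Calling `fetch_instance_type()` on a regular laptop will simply time out, since the metadata address only exists on EC2 hosts.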

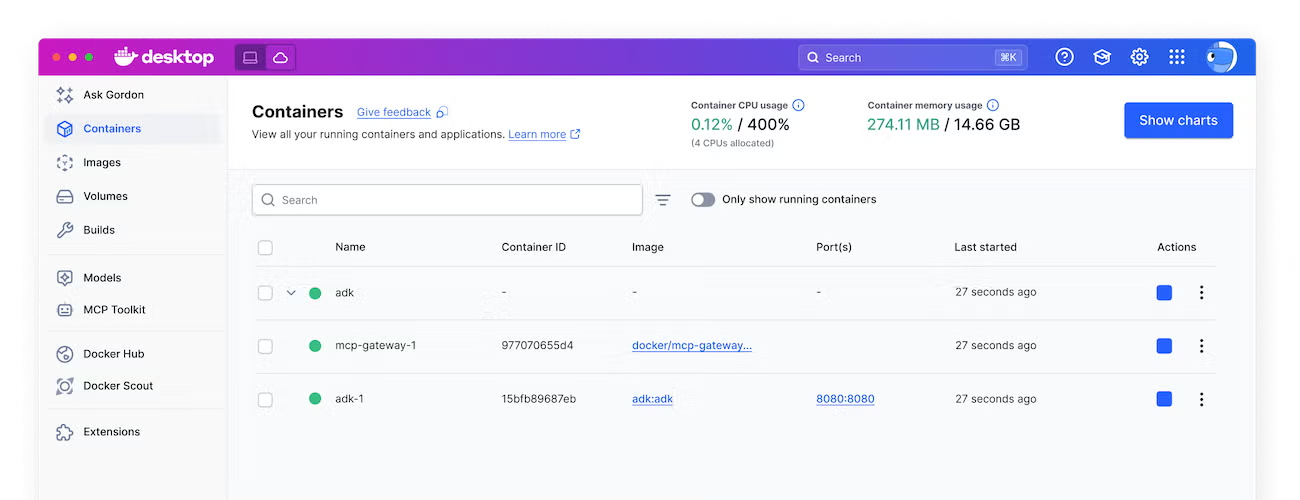

QuickStart

You'll need Docker Desktop 4.43 or later. You can go over the Docker Offload quickstart as well, but in a nutshell, it is just a matter of:

    docker offload start

You can also enable it in the Docker Desktop UI, with a simple toggle at the top.

That’s it. You can now use your usual flows, perform Docker image builds, run Docker Compose stacks - all with the power of the cloud.

As an example, I've just spun up Open WebUI with a larger model.

services:

webui:

image: ghcr.io/open-webui/open-webui:main

ports:

# expose port for web interface

- "8080:8080"

environment:

- OPENAI_API_KEY=not-really-needed-for-docker

- WEBUI_AUTH=False

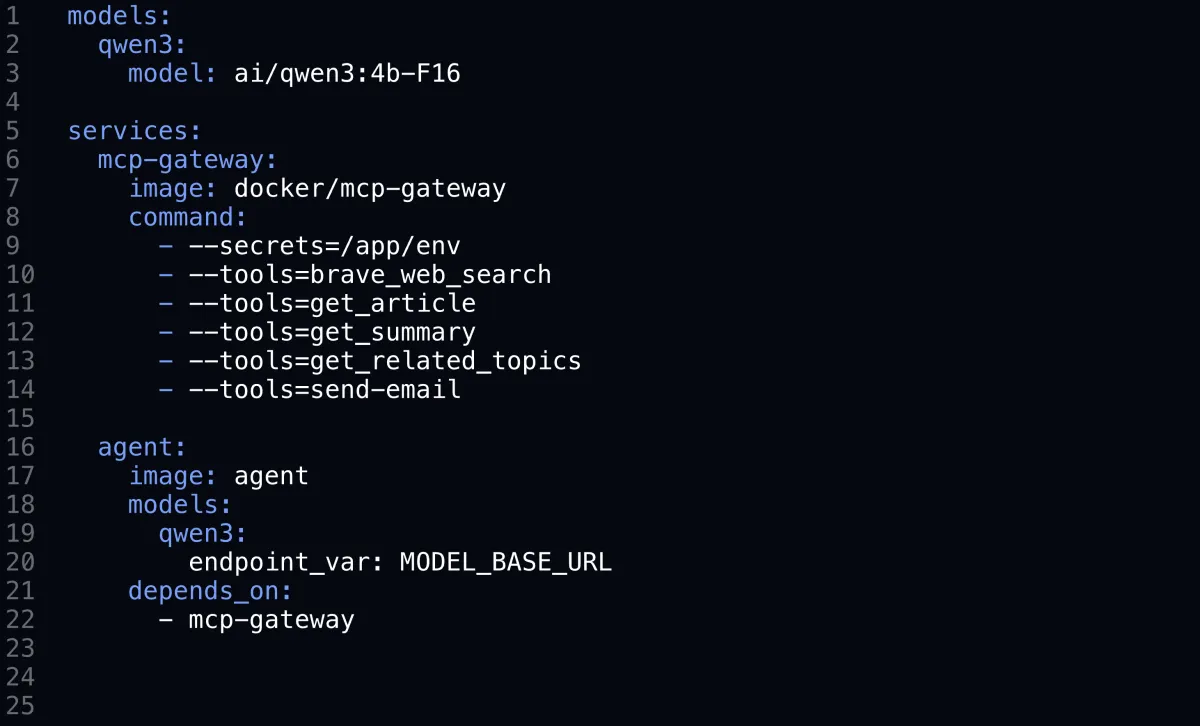

models:

gemma3:

endpoint_var: OPENAI_API_BASE_URL

models:

gemma3:

model: ai/gemma3-qat:12B-Q4_K_M

A simple docker compose up -d - and it's done, with zero load on my personal machine and nothing melting down. And the best part: port-forwarding works seamlessly on your own machine, just open http://localhost:8080/. Isn't that great?
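A note on the `endpoint_var` setting in the compose file: it names the environment variable through which the service receives the model's endpoint URL, and Open WebUI happens to read `OPENAI_API_BASE_URL`. A service of your own could consume it roughly like this. This is a sketch under the assumption that the injected value is an OpenAI-compatible base URL; the function names are hypothetical.

```python
import json
import os
import urllib.request

# Model tag taken from the compose file in this post.
MODEL = "ai/gemma3-qat:12B-Q4_K_M"


def chat_completions_url(env=os.environ) -> str:
    """Build the chat endpoint from the variable named by endpoint_var."""
    base = env["OPENAI_API_BASE_URL"]
    return base.rstrip("/") + "/chat/completions"


def build_chat_request(prompt: str, env=os.environ) -> urllib.request.Request:
    """Prepare (but do not send) an OpenAI-style chat completion request."""
    payload = {
        "model": MODEL,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        chat_completions_url(env),
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
```

Inside the container, passing `build_chat_request("Hello")` to `urllib.request.urlopen` would send the prompt to whichever model the compose file wired in, so switching models needs no code change.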

More advanced examples leveraging the power of Docker Offload can be found in this GitHub repository:

I did stumble upon one thing that isn't working: building cross-platform images is not supported yet. For me, this is a big deal, because it would have meant I could build everything with the power of the cloud, which seemed like a huge advantage.

Docker Offload is a paid feature that offers surprisingly affordable pricing. Users can pay as little as $0.01 per minute, or $0.015 per minute with GPU support. This equates to less than a dollar per hour, utilizing the full power of a GPU.

Considering an eight-hour workday multiplied by twenty working days, that comes to about $144 per month at the GPU rate. This remains competitive with Claude Max’s plan or other AI agents, which typically charge $200 per month with additional limitations on token usage.
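The arithmetic behind that comparison can be sketched as follows. The per-minute rates are the ones quoted above; the eight-hour day and twenty-day month are the same rough assumptions used in the text, and the function names are only illustrative.

```python
from decimal import Decimal

# Per-minute rates quoted in this post (USD).
RATE_STANDARD = Decimal("0.01")
RATE_GPU = Decimal("0.015")


def hourly_cost(rate_per_minute: Decimal) -> Decimal:
    """Cost of one hour of continuous usage."""
    return rate_per_minute * 60


def monthly_cost(rate_per_minute: Decimal,
                 hours_per_day: int = 8,
                 days_per_month: int = 20) -> Decimal:
    """Cost of a working month, assuming the session is never idle."""
    return hourly_cost(rate_per_minute) * hours_per_day * days_per_month


print(f"GPU: ${hourly_cost(RATE_GPU):.2f}/hour, ${monthly_cost(RATE_GPU):.2f}/month")
print(f"Standard: ${hourly_cost(RATE_STANDARD):.2f}/hour, ${monthly_cost(RATE_STANDARD):.2f}/month")
```

This is an upper bound for that schedule: any time the environment is stopped or idle-deleted would bring the number down.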

Furthermore, Docker automatically deletes these environments after thirty minutes of inactivity to prevent unexpected billing.

Docker provides a complimentary thirty-minute trial period for all registered users. There is no reason to delay signing up. What are you waiting for?

Docker & GCP

Simplify AI app deployment with Cloud Run and Docker Compose

The next big announcement: Google Cloud Run now supports compose.yaml, making the path from development to a full production environment as simple as one command:

    gcloud run compose up

For me, as a DevOps person, that is a really big thing. I haven't had a chance to try it yet, but the demo I saw was impressive. Read more below:

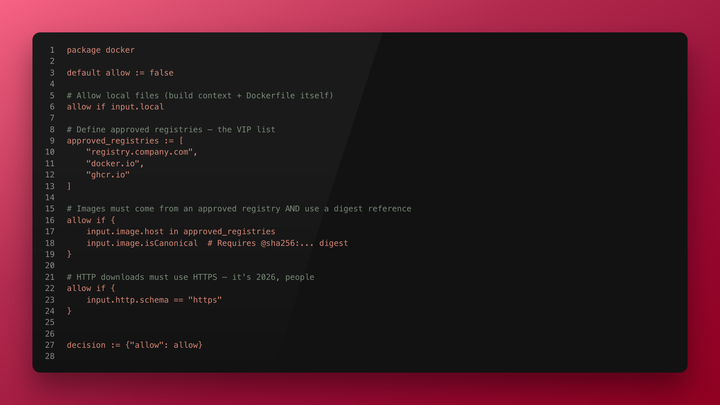

MCP Gateway & Model Runner

Two important pieces - open-sourced

Lastly, Docker Model Runner (the heart of Docker's AI inference) and MCP Gateway have both been open-sourced and are available on GitHub.

We all adore the open-source community, and I genuinely believe this is a fantastic move by Docker Inc.

As a developer myself, I absolutely love exploring the codebase, especially when it’s written in Go. I learn from it, and if I find a chance, I even contribute back. We all grow when we get to see how something is made, and it makes us feel more comfortable knowing what’s behind all the “magic.”

Missed the keynote? Watch the recording here:
